Yoichi Ishibashi

Japanese/石橋陽一 he/him

I am a researcher at NEC Corporation (Data Science Research Laboratories, Generative AI Group). My research interests lie in coding agents and self-improving LLMs. I believe the most economically valuable application of AI is automating technological development itself, and I have been conducting research toward this goal. In recent years, I have worked on:

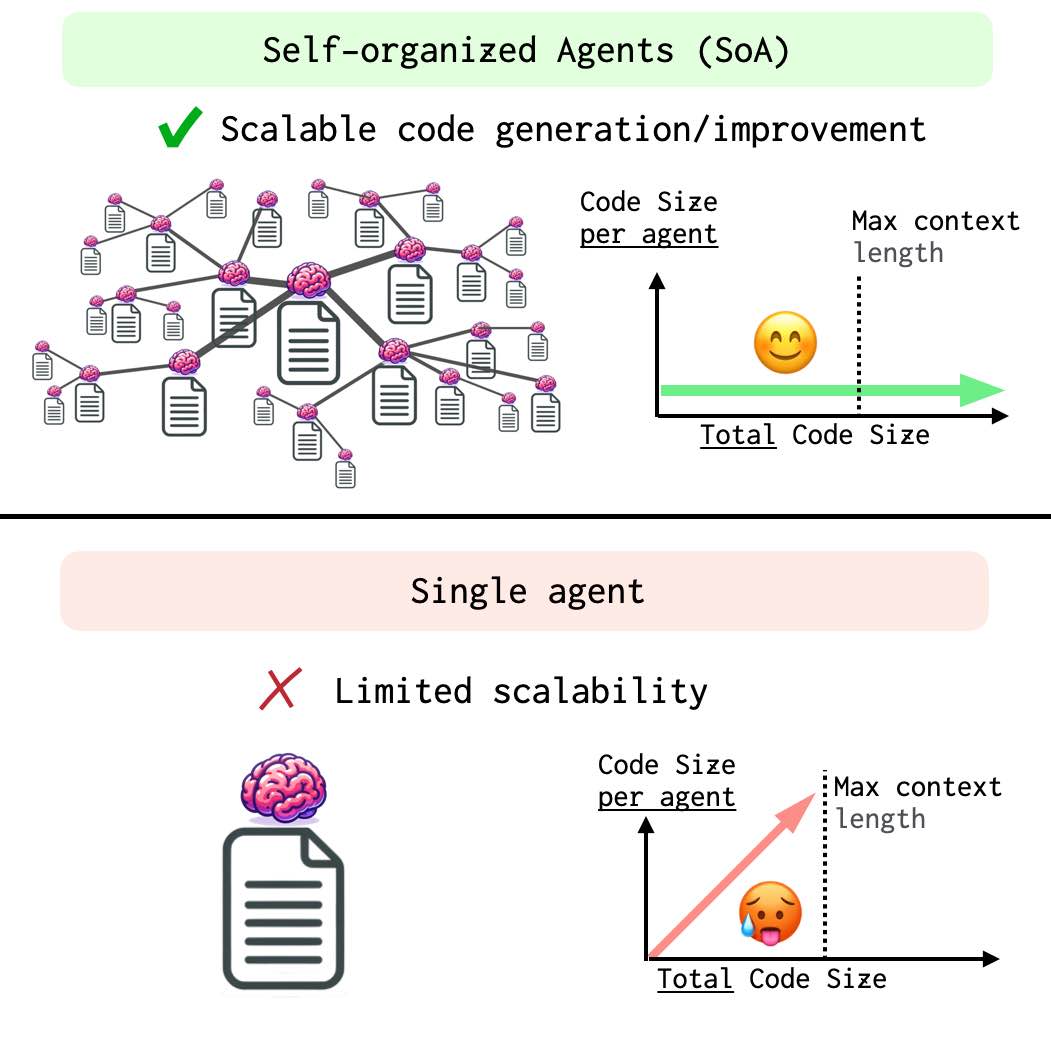

- a scalable multi-agent system that distributes context to address context length limitations

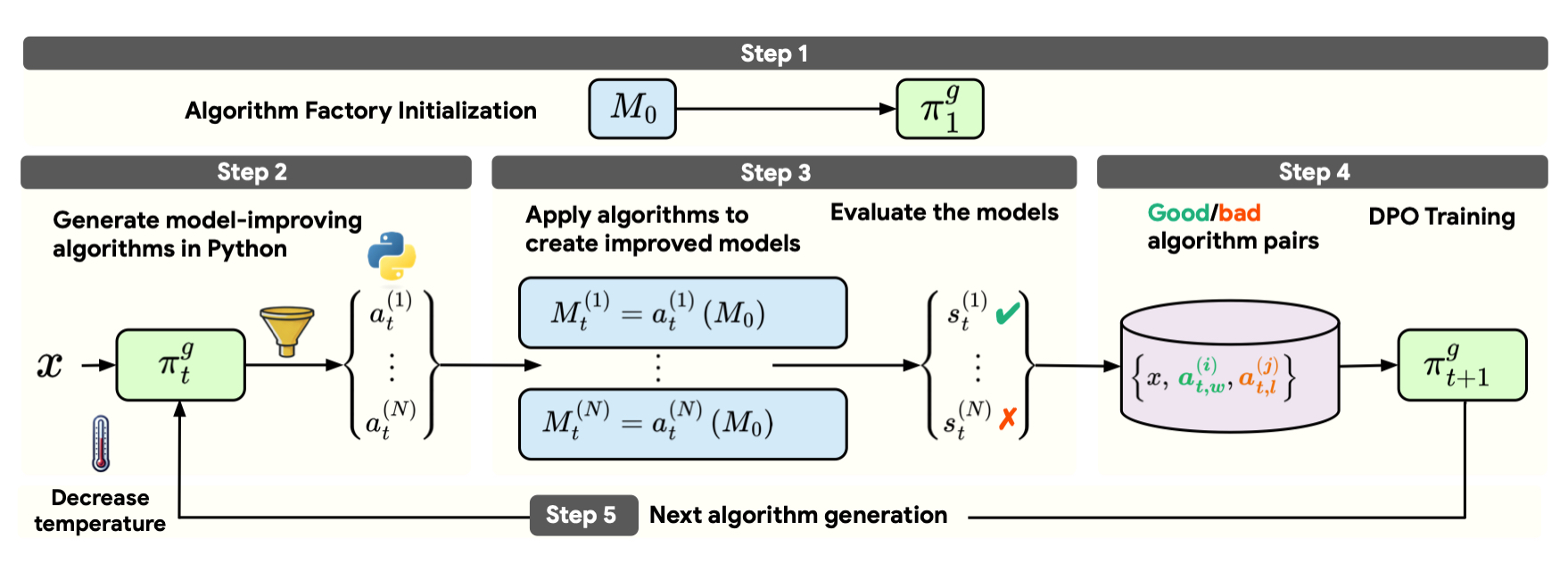

- a training method where LLMs iteratively discover algorithms and use reinforcement learning to find superior ones (NAACL 2025)

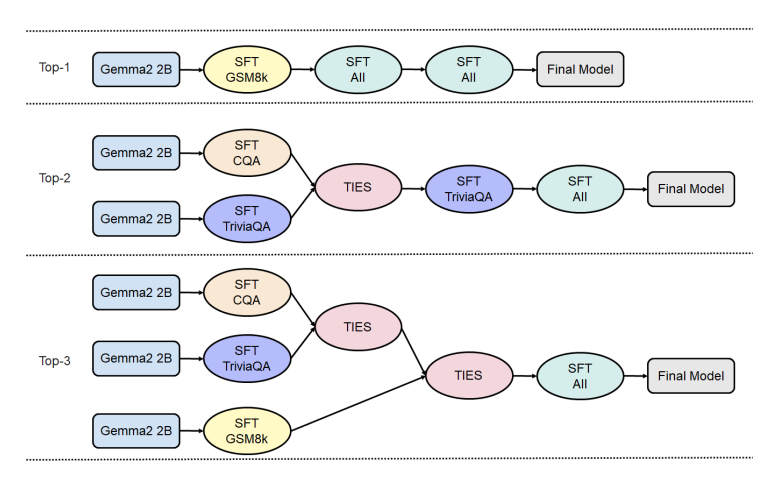

- automation of LLM development pipelines including SFT and merging (EMNLP 2025)

- a domain-agnostic method for training reasoning capabilities during pre-training using arbitrary text

I graduated with highest honors from Kyoto Sangyo University and received my Master’s (2020) and Ph.D. (2023) from NAIST. After a one-year postdoctoral position at Kyoto University (2023–2024), I moved to my current position at NEC.

For more details, please refer to my CV.

News

| Date | Announcement |
|---|---|
| Nov 09, 2025 | Our paper LaMDAgent: An Autonomous Framework for Post-Training Pipeline Optimization via LLM Agents has been accepted to EMNLP 2025 🎉 |
| May 22, 2025 | Our paper Mining Hidden Thoughts from Texts: Evaluating Continual Pretraining with Synthetic Data for LLM Reasoning is available on arXiv. |
| Feb 13, 2025 | Our paper Can Large Language Models Invent Algorithms to Improve Themselves? has been accepted to NAACL 2025 🎉 |
| Oct 22, 2024 | Our paper Can Large Language Models Invent Algorithms to Improve Themselves? is available on arXiv. |
| Mar 14, 2024 | Our paper Subspace Representations for Soft Set Operations and Sentence Similarities has been accepted to NAACL 2024 🎉 |